Authors

Honghao Fu1,2, Zhiqi Shen2, Jing Jih Chin2, Hao Wang1†

1The Hong Kong University of Science and Technology (Guangzhou)

2Nanyang Technological University

†Corresponding Author

Abstract

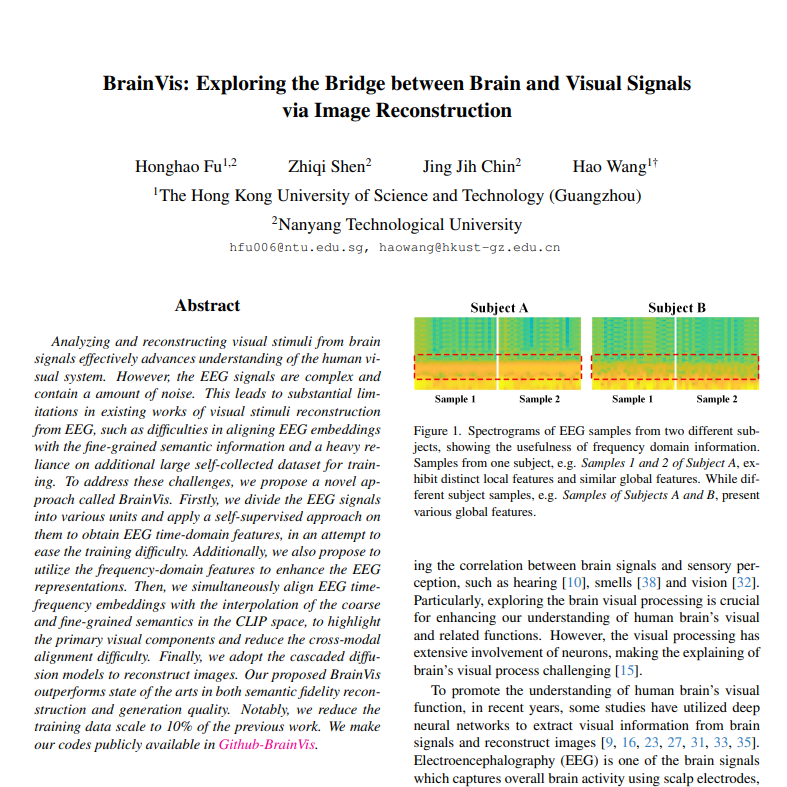

Analyzing and reconstructing visual stimuli from brain signals effectively advances understanding of the human visual system. However, the EEG signals are complex and contain a amount of noise. This leads to substantial limitations in existing works of visual stimuli reconstruction from EEG, such as difficulties in aligning EEG embeddings with the fine-grained semantic information and a heavy reliance on additional large self-collected dataset for training. To address these challenges, we propose a novel approach called BrainVis. Firstly, we divide the EEG signals into various units and apply a self-supervised approach on them to obtain EEG time-domain features, in an attempt to ease the training difficulty. Additionally, we also propose to utilize the frequency-domain features to enhance the EEG representations. Then, we simultaneously align EEG time-frequency embeddings with the interpolation of the coarse and fine-grained semantics in the CLIP space, to highlight the primary visual components and reduce the cross-modal alignment difficulty. Finally, we adopt the cascaded diffusion models to reconstruct images. Our proposed BrainVis outperforms state of the arts in both semantic fidelity reconstruction and generation quality. Notably, we reduce the training data scale to 10% of the previous work.

Contributions

- We improve the self-supervised embedding method for EEG time-domain features, eliminating the reliance on additional self-collected large-scale dataset.

- We propose the first EEG visual stimuli reconstruction method that integrates time and frequency-domain features, enhancing the EEG representations.

- BrainVis generates images with higher accuracy and better quality, and also overcomes previous limitation that was limited to only coarse-grained reconstruction.

Framework

We first aim to obtain the time and frequency features for the given EEG signals, in which the time encoder leverages self-supervised pre-training approach with masking segmentation and latent reconstruction, while the frequency encoder employs LSTM to extract features with Fast Fourier Transform (FFT). Then the time and frequency encoders are fine-tuned simultaneously with EEG classifier to obtain time-frequency dual embedding. Following this, an alignment network aligns the EEG time-frequency dual embedding with the fine-grained semantic of visual stimuli image in CLIP space, which is semantic interpolation of the label of visual stimuli image and its caption. Finally, the aligned EEG fine-grained embedding, along with the coarse-grained embedding of classification result, serve as conditions of cascaded diffusion models for multi-level semantic visual reconstruction.

Experiment Results

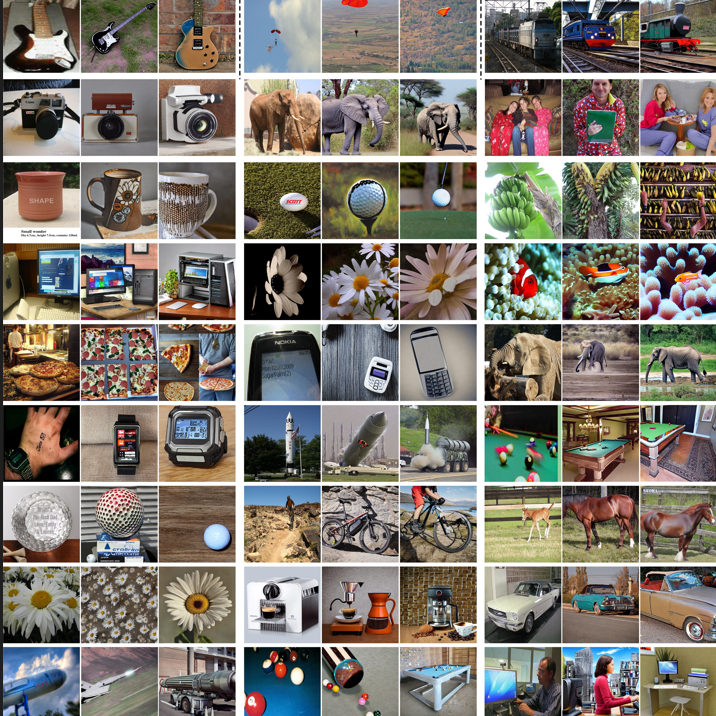

Below are visual stimuli reconstruction results (partial) and the ground truth (GT) images. We are able to not only reconstruct the correct types but also capture their fine-grained semantics to some extent, like prominent numerical, color, environmental, or behavioral features. For more results, please download the supplementary material.

Below is the comparison of reconstructed images’ quality between our method and prior works.

Below is the comparison of reconstructed images with the previews work on the same GT image.

For quantitative experiment, please download the paper.

BibTex

@article{fu2023brainvis,

title={BrianVis: Exploring the Bridge between Brain and Visual Signals via Image Reconstruction},

author={Honghao Fu and Zhiqi Shen and Jing Jih Chin and Hao Wang},

journal={arXiv preprint arXiv:2312.14871},

year={2023}

}

Broader Information

BrainVis builds upon several previous works.

- High-resolution image synthesis with latent diffusion models (CVPR 2022)

- Learning Transferable Visual Models From Natural Language Supervision (ICML 2021)

- Masked modeling conditioned diffusion model for human vision decoding (CVPR 2023)

- Deep learning human mind for automated visual classification (CVPR 2017)

- Self-Supervised Representations of Time Series with Decoupled Masked Autoencoders (2023)